Note: This is documentation for version 5.0 of Source.

Regression testing

Regression testing lets you detect when changes to the Source software alter the results your models produce. A regression test is a project file saved together with the results of its selected scenarios, which serve as 'baseline' results. You can then run the regression test in later versions of Source, and Source will notify you if the results differ from the baseline.

Regression tests ensure the following:

- Changes (such as bug fixes) in Source do not introduce additional problems;

- Old projects are compatible with future versions of Source; and

- Correct results are not inadvertently altered because of software changes.

You can create and run local regression tests, and it is good practice to do so for your models before you upgrade to a newer version of Source. Your projects can also be included in the eWater Regression Test Suite; please contact eWater if you are interested.

Building regression tests

The first steps are to set up the test project, create results to act as the baseline for the regression test and then build the test using the Test Builder.

- Open or create a project with the scenarios that you want to test

- For each scenario that you wish to test:

  - Before running, ensure that you are recording only the parameters relevant to your regression test. Keep the number of recorders turned on small, for example only storage levels or the volume at the outlet node.

  - Configure and run the scenario

  - Check that all the recorded results give the answers you expect

- Open the Test Builder (Figure 1) using Tools » Regression Tests » Test Builder

- Click the ellipsis button (…), navigate to the folder where you want to save the baseline data and test project for the regression test, and click Select Folder.

- Click Save to save the test to the selected folder, or click the ellipsis button again to select a different folder.

Once you save, the Test Builder will create a sub-folder with the same name as your project that contains:

- A sub-folder for each scenario in your project, named S01, S02, and so on. Each of these folders contains *.csv files with the recorded results for that scenario. These are the baseline files for your regression test comparison, that is, your expected results;

- A Source project file that the Test Runner loads and runs to compare with the baseline files; and

- A Plugins.csv file listing all plugins currently installed in Source, regardless of whether your test project uses them. When you run the regression test in a later version of Source, all plugins listed in this file must be installed. Therefore, if your test project does not use some of these plugins, you can edit Plugins.csv manually and delete the rows for those plugins. If your test project uses no plugins, you can delete Plugins.csv.

Figure 1. Regression tests, Test Builder
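If you keep many regression tests, a small script can confirm that a test folder has the layout described above before you run it. The following Python sketch is hypothetical and not part of Source: the function name `summarise_test_folder` is illustrative, it assumes scenario folders are named "S" followed by digits, and it only checks which files are present, not their contents.

```python
import re
from pathlib import Path

# Assumption: scenario sub-folders are named "S" followed by digits (S01, S02, ...).
SCENARIO_DIR = re.compile(r"S\d+")

def summarise_test_folder(test_dir):
    """Summarise a regression test folder created by the Test Builder.

    Hypothetical helper, not part of Source. Returns the baseline *.csv
    files found in each scenario sub-folder and whether Plugins.csv exists.
    """
    root = Path(test_dir)
    scenarios = {}
    for sub in sorted(p for p in root.iterdir() if p.is_dir()):
        if SCENARIO_DIR.fullmatch(sub.name):
            # Baseline results for this scenario, sorted for stable output
            scenarios[sub.name] = sorted(f.name for f in sub.glob("*.csv"))
    return {
        "scenarios": scenarios,
        "has_plugins_csv": (root / "Plugins.csv").is_file(),
    }
```

A scenario folder with an empty file list, for example, would suggest that no recorders were enabled when the baseline was built.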

Running regression tests

Use the Test Runner to run regression tests on test projects you created previously. Run these tests in a more recent version of Source than the one in which you created the test project. For example, you could build a test in the last production release and run it in the most recent beta version to compare the results the two versions generate.

To run a regression test:

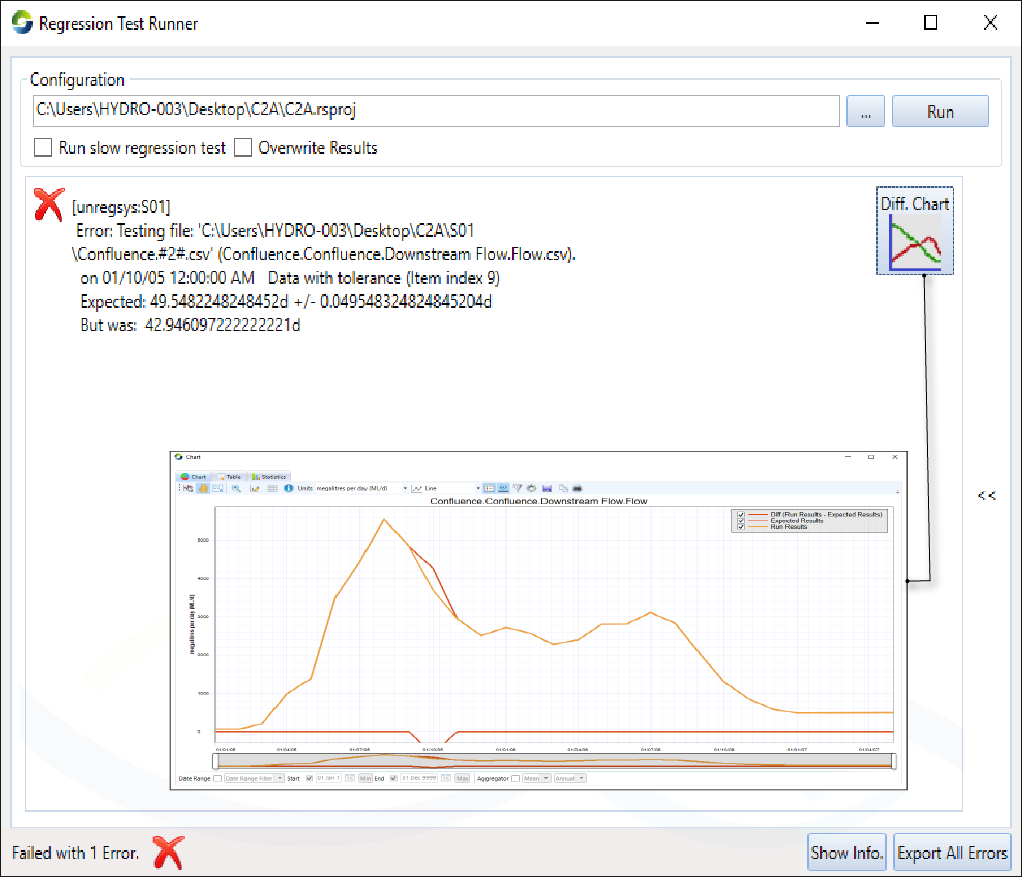

- Choose Tools » Regression Tests » Test Runner to open the dialog shown in Figure 7;

- Click on the ellipsis button to load a test project; and

- Click Run to run the scenario(s) in the test project.

After a regression test runs, any errors are displayed in the Test Runner window. To compare differences in results graphically, click the Diff. Chart button. Click the Hide Info. button to toggle the display of information about the regression test run on or off. The Export All Errors button saves the errors and information to a file.

The Run slow regression test option is intended for debugging by developers, including plugin writers. By default, a standard regression test is performed: the project is loaded and run, and the results of each scenario are compared to the baseline. In a slow regression test, the steps of a standard regression test are repeated several times and combined with copying and importing scenarios, to check that all parameters and inputs are loaded, copied, imported, saved and reset correctly at the beginning of each run.

Figure 7. Regression tests, Test Runner
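The core idea of a standard regression test is a comparison of each new result file against its baseline file. The Python sketch below illustrates that idea only; it is not how the Test Runner is implemented. The function name `compare_results`, the CSV layout (a header row, a date label in the first column, numbers in the remaining columns) and the tolerance values are all assumptions.

```python
import csv
import math

def compare_results(baseline_path, new_path, rel_tol=1e-9, abs_tol=1e-12):
    """Compare a baseline result CSV with a newly generated one.

    Hypothetical helper, not Source's implementation. Assumes each file has a
    header row, a date label in the first column and numbers in the others.
    Returns a list of difference messages; an empty list means a match.
    """
    with open(baseline_path, newline="") as f:
        baseline = list(csv.reader(f))
    with open(new_path, newline="") as f:
        new = list(csv.reader(f))

    errors = []
    if baseline[:1] != new[:1]:
        errors.append(f"header differs: {baseline[:1]} vs {new[:1]}")
    if len(baseline) != len(new):
        errors.append(f"row count differs: {len(baseline)} vs {len(new)}")

    # Row numbers are 1-based file lines, so the first data row is row 2.
    for row_no, (b_row, n_row) in enumerate(zip(baseline[1:], new[1:]), start=2):
        if len(b_row) != len(n_row) or b_row[:1] != n_row[:1]:
            errors.append(f"row {row_no}: {b_row!r} vs {n_row!r}")
            continue
        for col_no, (b, n) in enumerate(zip(b_row[1:], n_row[1:]), start=2):
            # Illustrative tolerances; Source's own comparison rules may differ
            if not math.isclose(float(b), float(n), rel_tol=rel_tol, abs_tol=abs_tol):
                errors.append(f"row {row_no}, column {col_no}: {b} vs {n}")
    return errors
```

Comparing numbers with a tolerance rather than as text avoids reporting differences that are only formatting, such as `100` versus `100.0`.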

Troubleshooting regression tests

If your regression test fails, read the error message(s), then check the release notes and regression test changes for all releases between the version of Source in which you created your test project and the version in which you ran the regression test. You can also run the regression test in some of these intermediate releases to find the version in which the errors first appear. If you wish to investigate the errors further, or do not have access to the beta releases, please contact Source support.
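When there are many intermediate releases, you do not have to test each one in turn. If the results changed once and stayed changed, a binary search over the releases finds the first failing version with few runs. The Python sketch below is hypothetical: `first_failing_version` is an illustrative name, and `passes` stands for the manual step of running the test in that release's Test Runner and noting whether it passed.

```python
from typing import Callable, Optional, Sequence

def first_failing_version(versions: Sequence[str],
                          passes: Callable[[str], bool]) -> Optional[str]:
    """Return the earliest version in which the regression test fails.

    `versions` must be ordered oldest to newest. Assumes the test passes in
    every version before the change and fails in every version from it on.
    `passes` is a hypothetical callback for running the test in one release.
    Returns None if the test passes in every version.
    """
    lo, hi = 0, len(versions)  # the first failure lies in versions[lo:hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if passes(versions[mid]):
            lo = mid + 1  # change happened after this release
        else:
            hi = mid      # change happened in this release or earlier
    return versions[lo] if lo < len(versions) else None
```

For n releases this needs about log2(n) test runs instead of up to n. If the results changed more than once across the range, the single-change assumption no longer holds and the search may locate only one of the changes.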

Updating regression tests

If the regression test reveals that your results have changed, and after troubleshooting you are satisfied that these changes are intended (e.g. caused by known software improvements), you can overwrite the baseline data saved with your regression test: enable Overwrite Results and click Run again. This updates your regression test to the version of Source you are currently using for testing.